Data transformation is a really big deal. If you work in the field of data, in any capacity, you know this.

Pristine data sets that are ready for human interpretation don’t just fall out of the sky. They come with warts. We need to deduplicate, remove nulls, format dates, rename columns, reshape tables, join with other data sources — the list goes on and on.

Before we can perform that posh analysis, we must deal with an overwhelming amount of data transformation, and this is as true for the budding young data team as it is for the omnipresent FAANG company.

After all, the goal of any data practitioner is ultimately to create and disseminate organizational knowledge. This is, in fact, the mission statement of dbt Labs and serves as an inspirational reminder of what we’re really trying to accomplish.

👉 Hold up — what is dbt?

For those who are unfamiliar with dbt (data build tool), it's a tool that gives data analysts superpowers for transforming data in the warehouse. Using simpleSELECTstatements, you can create production-grade transformation pipelines that are documented, tested, and standardized. It's the industry standard tool for data transformation.

But where does organizational knowledge live?

In order to create and disseminate knowledge, we need to know where that knowledge lives. After all, as wonderful as dbt is, it won’t capture everything, right? Just think of all those reclusive data transformations that contain organizational knowledge but stubbornly live outside of dbt’s walled garden (and maybe for good reason).

Grab a coffee and join us as we dig into the dark and forgotten corners of our stack, looking for nuggets of valuable organizational knowledge. Let’s think about how we use that knowledge and how it relates to dbt. Yes, this includes Excel files sitting on Finance Bill’s desktop (more on him later).

👉 Want to dig deeper?

Check out this panel discussion where we bat around these same ideas at Coalesce along with Benji Knights Johnson (Senior AE at DAZN) and Nils Werner (Data Engineer at Eoch Group).

Do you see it? It’s knowledge outside of dbt!

We naturally think of data transformation as synonymous with what dbt does — you know, the tested, documented, and GitHub-ed types of transformations. But a heck of a lot of organizational knowledge is contained (stuck?) in the wild west of legacy systems, notebooks, and Excel files (as depicted in the cover image to this blog post).

Let’s try to understand the reason for these types of data transformations, the value they bring to the organization, and their relationship with dbt. The hope is that data teams can develop a better understanding of the trade-offs between dbt and other knowledge-containing data systems. And, of course, you will experience the inevitable “smells” of transformations that really should be handled with dbt.

Legacy systems

Legacy systems, AKA the cursed data stack™. If you’ve ever been part of an organization that’s been around for a while, you’ve likely dealt with legacy systems (COBOL, anyone?). Companies that existed before dbt had to make hard choices about their data transformation stack. Once brilliant, bespoke solutions can ultimately become insidious implementations.

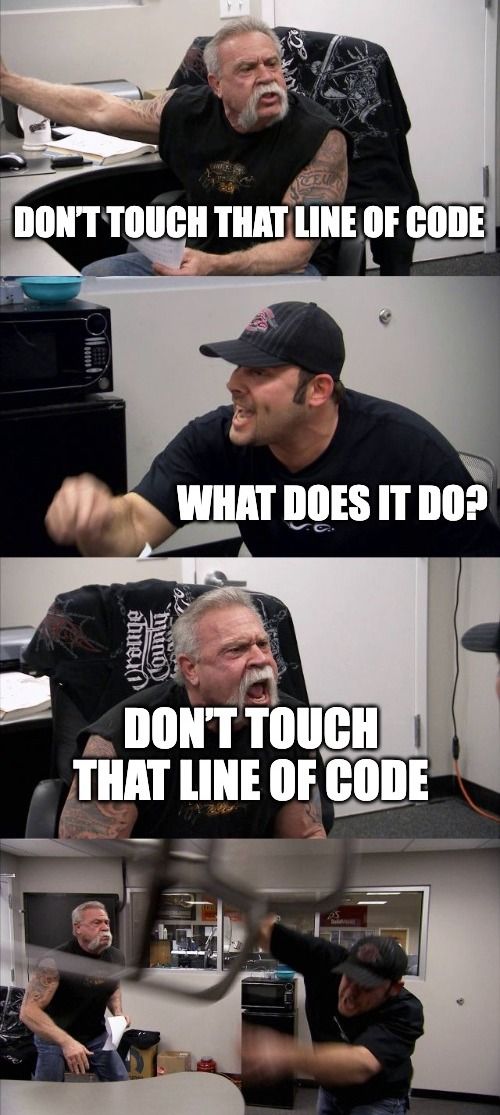

We’ve seen some real cursed data stacks in our day. Consider a fictitious company that finds itself mired in tech debt despite its best laid plans, inevitably ending up in this situation:

Right, so I guess no one knows what that line of code does, but it most certainly makes money. And chances are that you know a line of code like that, too! All this is to say that pre-dbt transformation approaches are more common than we’d like to admit and convincing business stakeholders to see the “dbt light” is a big ask if money is already being made.

Appeal to time & money

When the struggle with legacy systems becomes too “smelly,” practitioners will naturally want to move to newer tools in the modern data stack, such as dbt. But what can we do as data practitioners to move the needle toward new approaches?

Design a prototype of how things would be if the company adopted modern tools (RIP, normal working hours). The key is to translate your suggestions into the universal languages of time and money. Quantify the impact of deprecating complex and hard-to-maintain technologies.

For example, demonstrate to business stakeholders that, since time is money, you will be saving them $X since developers won’t be spending Y hours on the maintenance of legacy systems. That’s one way to sell the modern data stack to business stakeholders. If you can make a case from that perspective, you might just move the needle.

Notebooks

Notebooks are interesting entities when it comes to data transformation. One can characterize a notebook workflow as an agile and chaotic exploratory data journey involving multiple languages and often finishing in the form of a narrated story.

Because of their literate style and reach into limitless tools and domains, notebooks naturally contain massive amounts of organizational knowledge and are interwoven throughout the data stack in surprising ways:

- Notebooks function as pseudo-BI tools, undergirded by the dbt models themselves and transforming data for the last mile of knowledge dissemination.

- Transformations in notebooks can also come in the form of ad-hoc analyses. These data transformations probably don’t end up in dbt, but someone should probably check on this!

- Notebooks serve as model prototyping interfaces before a “lift and shift” into dbt — a symbiotic relationship between chaos and order (more on this later).

Going back to the analogy suggested by the image above, notebooks are chameleons in the data stack. They perform many roles both near the dbt border and far outside of it. And now, with Python models in dbt, organizational knowledge is sure to continue to accumulate in notebooks.

Excel

Excel is the most successful data transformation tool in the world (nervous smile). Okay, maybe pervasive is a better word, but you get the point. Nearly everyone knows how to use it, the barrier to start using it is low, and it’s got a high ceiling if you are a wizard.

If our mission is to empower data practitioners to create and disseminate organizational knowledge, can we stop for a second to appreciate just how much knowledge is contained in Excel? You know the file I’m talking about. The one that’s sitting on Finance Bill’s desktop, suspiciously close to the Windows recycling bin. That’s the one. Millions of dollars lost if there’s a bad mouse slip one day.

All jokes aside, Excel is the data dialect that businesses speak, for better or for worse. In our panel discussion, we considered the organizational knowledge in Excel, grimaced, and then imagined a world where dbt and Excel could interact.

After all, the boundary that separates dbt transformations from the rest is expanding. It now encompasses metrics and Python. It’s coming for the rest if this trend continues. So why not an Excel-dbt connector? Imagine a world where respectable Excel citizens are brought into the dbt fold. For example:

“Hi, Finance Bill. This Excel table is well made! Your recent transformations can be handled by our analytics engineers so you never have to worry about that mouse slip. Click here to save yourself with dbt (or some other alternative)."

If Excel is how businesses speak, an Excel-dbt connector would be an essential tool in diplomacy between finance and data teams. Someone should create this tool and make millions.

The boundary between dbt and chaos

The workflow of using dbt feels so explicit — so CLI, so SQL (mostly). It’s perfectly standardized, encourages software engineering best practices, and generally keeps us safe from ourselves. On the other end of this spectrum, we see the rebels, the outcasts, the wayward data transformations in legacy systems, notebooks, and spreadsheets.

Perhaps the real value in this thought experiment is to begin to appreciate that some forms of non-dbt transformations are perfectly healthy and necessary. They are the complimentary chaos to our desire for standardization (and periodic dip into over-engineering). They are the dots in the yin-yang symbol. The step toward the dangerous but exciting wilderness that we all need.

Without these types of data transformations, our stacks might be more organized, but we’d be sluggish in our ability to create and disseminate knowledge quickly. It’s actually the interaction between dbt’s border and everything outside of it that allows data knowledge to thrive — some knowledge becoming codified into dbt, some being left to run wild, and some being passed back and forth via integrations and interfaces.

So, the next time you hear the term “data transformation,” let’s stop to salute all those transformations that don’t fit into a framework but nevertheless make the world go round. We need them, too.

Appendix of random thoughts

- In computer programming, a code smell is any characteristic in the source code of a program that possibly indicates a deeper problem (copied directly from Wikipedia).

- Coined by Katie Bauer during Coalesce. Resulted in swag. Triggered interest in purchasing curseddatastack.com.

- As Nils pointed out in the panel discussion, that line of code makes money. Don’t even breathe on it!

- Oh, so all we need to do is build a new data stack on top of maintaining the old one. That’s a tall order!

- Wait, are you the Excel person in your company? If so, the wizard competition is here.

- “Benji is our AE (Analytics Engineer) and our EA (Excel Assassin). Benji will come for you. You can

dbt runbut you can’tdbt hide.”