Not another chat bot!

The path not taken

In the previous entry in this series, we discussed why we deliberately chose code completion as the first use case to solve with generative AI. Having seen the great success of that feature, we then turned our full attention to the question: how can we integrate conversational AI features into our notebook experience in a way that makes sense for our users?

The emphasis on the last part is critical. While the success of ChatGPT and the Code Interpreter plugin (now known as Data Analyst) prompted many in the data tool space to hastily incorporate chatbot functionalities, most of these implementations amounted to little more than glorified wrappers around the OpenAI API. Others explored the plugin approach, attempting to simplify access to specific features through ChatGPT.

For us, neither approach seemed suitable. Why?

The limits of a chat window

On the one hand, ChatGPT demonstrated that conversations are a great mechanism to interact with data, one that felt natural for users to pick up. It also showed that the combination of natural language, code and visualizations can all nicely fit into this conversational flow.

On the other hand, it also exposed serious limitations. The analytical flow becomes too confined when forced into a chat thread format, because all outputs are crammed into singular entries. This complicates the process of iterating or making adjustments to individual components, such as modifying a section of code or altering a chart's settings. Such flexibility is vital for efficiently reaching analytical conclusions and for clearly illustrating the path to those findings. Additionally, a chat thread is an exhaustive, unedited log of all actions taken, which is cumbersome to navigate once the analysis is complete. In contrast, an edited notebook is significantly more user-friendly, offering greater opportunities for interaction and clarification.

Another significant issue with chat interfaces was the lack of transparency, with details of code execution being hidden by default and missing crucial basic information. Moreover, the challenge of reproducibility loomed large: given the non-deterministic nature of generative AI outputs, it was impossible to ensure that the same analytical results would be generated twice.

There were numerous other technical limitations as well (which we detailed in this article), but the key takeaway for us was that all these problems were intrinsic to the chat interface itself: a linear thread format where entries are supposed to be more or less immutable.

As a notebook company, we believed we had something better on our hands.

💡 We were arriving at the bold view that notebooks might just be the perfect medium to incorporate conversational AI help for data work in a way that still allows for a flexible, transparent and reproducible analytical flow. That is, if we can crack the user experience.

This insight guided us towards two core principles that shaped our design strategy:

- The experience must be entirely integrated within our product—investing in GPT plugins is futile. Notebooks offer a superior format for both conducting and presenting data analysis.

- We will not develop a generic chatbot. The AI assistance needs to be immediately actionable, in-context and baked right into the analytical flow itself.

Keeping it simple, shipping early

Okay, so we had our starting vision (sort of), but the bigger part of the challenge still remained. What and how are we going to ship first?

Things were moving insanely fast. New models, capabilities and concepts (GPT-3.5 Turbo, GPT-4 in preview, model fine-tuning, plugins, function calling, agents, retrieval augmentation, etc.) were cropping up faster than we could possibly turn them into tried and tested features. To avoid getting stuck, we had to draw some sensible boundaries for ourselves and focus on one thing at a time. So we made a few important decisions.

We will not turn into an AI model/tooling company. Our strengths are rooted in providing a superior user experience via the notebook structure and leveraging the rich context present in our customers' workspaces. We opted to utilize the most advanced models available from OpenAI, refraining from developing custom models, engaging in fine-tuning, or crafting supporting functionality in-house (some of the latter eventually became necessary, but we aimed to minimize it as much as possible).

We will ship early and aggressively iterate. There was no way we were going to get this right out of the gate. This was uncharted territory, with generative AI representing a new type of UX language. We discarded “chatbot” from the vocabulary, which helped, but this language is still being written. We didn’t want to keep things in prolonged Beta, because we were afraid they would end up as features in infinite limbo. We needed real, everyday interactions from our customers to inform our path forward. Luckily, we were convinced that this would be a long-term, transformative journey for us, which eased the pressure on our first few releases.

We will focus on some of the most proven, basic problems and build from there. Addressing core customer issues such as code generation and bug fixing offered a more practical foundation than pursuing more eye-catching features like automated analysis or AI-driven visualizations. We recognized the importance of reaching those milestones, but understood the necessity of learning alongside our customers. The most effective way to gain insights and engage in meaningful dialogue was to deliver value, even if it initially took the form of a very rough diamond.

From early adoption to mainstream usage

In July last year, we shipped a simple AI assistant that was capable of generating or editing a Python block. The feature was not easy to find; the prompt interface was tiny; the generation was slowed down by long explanatory texts; the scope was limited to a single block at a time; and the intelligence of the AI was limited, failing to leverage the wealth of context available within your notebook.

How did it perform? Initially, there was a wave of enthusiasm among our customers, with some adopting the new feature quickly. However, the overall reach and impact were moderate at best. Following an initial surge of interest, only about 16% of our customer base engaged with this feature on a weekly basis. This was far from the groundbreaking AI revolution we had envisioned.

We didn’t lose hope, however, as we knew we were just laying the foundations. In the 8 months that followed, we kept iterating, releasing new features, learning from both failures and successes, and upping our knowledge of the AI game in general.

As a result, we now have an ever-present AI collaborator capable of autonomously carrying out complete analytical projects. It can create multiple blocks and self-correct its way to the final results. While doing so, it remains fully aware of your notebook's content, the outputs of block executions, runtime variables, attached file names, and SQL integration schemas. It even provides prompt suggestions, sparing you the effort of crafting instructions from scratch.

Looks pretty sweet, but has this moved the needle and truly made a difference? Do our customers sense a revolutionary shift in their daily tasks?

The answer to both questions is a resounding yes!

Engagement with our AI features has more than tripled in the past 8 months. Currently, more than 52% of our paying customers use AI features on a weekly basis.

It’s not a temporary flare-up of interest either. It’s widely known that the demo candy type of AI features (those that don’t address and solve critical user problems) suffer from an ugly retention problem. In contrast, Deepnote AI is really at the core of the notebook experience, reflected in its long-term, healthy feature retention.

Furthermore, it's not just about the quantity of users; the depth of engagement is also on the rise. On average, our users turn to AI assistance more than 24 times a week.

What’s even more telling is the customer stories we keep hearing. At some point during these 8 months, Deepnote AI evolved beyond being just a productivity tool and became a genuine enabler, making data notebooks accessible to an entirely new audience. Individuals who were curious about data, but typically shied away from notebooks and Python, now use Deepnote AI as a way to run analytical projects on their own, while also learning the tools of the trade at the same time. As one of our customers put it:

I have been a consumer of BI reports for over 15 years. But there’s a great leap between consuming a report and using the underlying technology. Deepnote AI massively helped in bridging this gap and helped me to learn. It made coding so much more efficient, I cannot imagine how long it would have taken without it. Sometimes I actually felt like cheating, wasn’t sure I would actually learn this way. But I realized, it’s not taking away from learning, it just saves time on my own terms.

Even internally within Deepnote, we saw several signs of this radical empowerment. One of the testing rituals we have is what we call dogfooding exercises: these are regularly held data challenges that everyone in the company, regardless of the job title or position, has to try and solve with Deepnote.

💡 When we observed our sales team successfully completing complex tasks such as data analysis, churn modeling, or even building data apps in less than an hour, we realized we had tapped into something big. The term 'magic' started to frequently appear in customer feedback.

But how did we get here from that humble, early first release?

Moving forward on 6 fronts

We found that producing these results required us to keep improving along at least 6 different dimensions.

- Solving more problems

- Improving discoverability

- Providing more context

- Expanding the range of AI actions

- Refining the user flow

- Prompt engineering

We will discuss each of these aspects separately.

Solving more problems

We initially focused on the basic use cases of code generation and editing. However, we recognized that generative AI could address a far broader spectrum of challenges for our customers. While we had a ton of ideas, we remained relatively conservative and selected problems based on their assessed practical importance. Addressing one of the most frustrating aspects of coding—bug fixing—seemed like the perfect opportunity to further enhance the assistance provided by our AI.

We added a ‘Fix with Deepnote AI’ button to our error states and AI engagement more than doubled practically overnight! This was one of the biggest early wins for us, but as the gradually declining engagement over the next few weeks demonstrated, numerous refinements were still needed to make it really useful for our customers (see more on that below).

Discoverability

Given the widespread enthusiasm for generative AI, it might have been tempting to assume that our AI button would instantly attract attention, regardless of its placement. We included a 'Generate with Deepnote AI' placeholder in our most popular block (the code block), and there was also a button in one of the most frequently used functions in Deepnote—the block sidebar actions.

Yet, despite these efforts, it became apparent that many users were unaware of the breadth of AI capabilities within our product. We recognized the need to prioritize discoverability. We experimented with AI-focused onboarding flows and introduced an AI button in a prominent location within our UI: the notebook footer. While the latter resulted in significant improvements (with over a 25% relative increase), we still sensed unnecessary friction in accessing AI features. Ultimately, we opted to consistently present the AI prompt at the bottom of the notebook. Although there is always room for improvement in terms of discoverability, this marked an important milestone for us, signifying to our users that Deepnote AI now lies at the heart of the notebook experience.

More context

An AI assistant’s usefulness is made or broken by its ability to understand and respond to user needs accurately and efficiently. What this requires is giving the right context to it: simply put, the AI needs to see what you see, know what you know. It needs to understand the purpose of the project, the data that you’re working with, the variables that you produced, the approaches that you tried in your analysis so far and other relevant projects from across your workspace.

Without this contextual awareness, the experience is kind of broken, which erodes user trust and severely limits the practical value of the feature. Our users picked up on this immediately, noting the annoyance of having to spell things out for the AI. Compared to ChatGPT, it felt jarring, so many of our users still kept a ChatGPT tab open while using Deepnote. If we wanted to live up to our vision, we had to fix this, and quickly.

This is a hard problem to solve. There are all sorts of technical limitations we have to juggle. One of them is the size of context windows: despite the exponential growth of token limits over the past year, we still cannot just shove in all the data we have and expect great results. Even when we can fit all the information in, the model’s recall is far from perfect, so we have to do a lot of prioritization and manual sorting on our end. Pricing and speed are important factors as well.

Despite the hurdles, we steadily achieved notable advancements in Deepnote AI's contextual understanding. We began by addressing the fundamentals, such as the title and notebook content, before incorporating other valuable details like runtime variable schemas and filenames. While these enhancements were beneficial, they primarily comprised metadata. The next bigger leap was adding block outputs to the context. This essentially enabled the AI to form an idea of the data you’re working with, which made its suggestions a lot more tailored and powerful.
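
To make the prioritization idea concrete, here is a minimal sketch of packing context into a fixed token budget. It is not Deepnote's actual implementation: the `ContextItem` class, the priority numbers, the 4-characters-per-token estimate and the budget of 100 are all assumptions chosen for illustration.

```python
from dataclasses import dataclass


@dataclass
class ContextItem:
    label: str     # e.g. "title" or "block_outputs"
    text: str      # content that would be sent to the model
    priority: int  # lower number = more important (hypothetical ranking)


def estimate_tokens(text: str) -> int:
    # Crude assumption: roughly 4 characters per token.
    return max(1, len(text) // 4)


def pack_context(items: list[ContextItem], budget: int) -> list[ContextItem]:
    """Greedily keep the highest-priority items that fit in the token budget."""
    chosen = []
    used = 0
    for item in sorted(items, key=lambda i: i.priority):
        cost = estimate_tokens(item.text)
        if used + cost <= budget:
            chosen.append(item)
            used += cost
    return chosen


# Made-up notebook context, ordered the way the text introduces each source.
items = [
    ContextItem("title", "Churn analysis Q3", priority=0),
    ContextItem("notebook_content", "df = load_customers()\n" * 10, priority=1),
    ContextItem("variable_schemas", "df: DataFrame[customer_id:int, churned:bool]", priority=2),
    ContextItem("file_names", "customers.csv, plans.csv", priority=3),
    ContextItem("block_outputs", "customer_id churned\n" * 200, priority=4),
]

selected = pack_context(items, budget=100)
print([item.label for item in selected])
```

With this small budget the large block output is dropped while the cheaper metadata is kept. A real system would more likely truncate or summarize an oversized output than drop it entirely.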

The most telling measure of the impact of these improvements was the declining use of ChatGPT, indicating that Deepnote AI was evolving into a more user-friendly tool for our customers' workflows. As expressed by one of our customers:

I’m a huge fan. In the beginning I still copied code to ChatGPT, but lately there have been improvements and I don’t do that anymore. We use some BI tools, but they want to charge so much to have a ChatGPT prompt that doesn’t do anything versus Deepnote, where its AI is built right into the notebook, which is what I need.

At every major step of context expansion, we made sure that the user has control over the privacy of their data. We implemented settings that empowered users to opt out of sharing block outputs or disable various AI features entirely. Moving forward, we remain committed to prioritizing data privacy as we continue to enhance the AI's contextual awareness.

Range of actions

A lot of generative AI solutions are essentially polite answering machines. You ask a question, they come back with answers and suggestions. This is already useful, of course, but Deepnote is a tool for getting things done. We wanted to convert Deepnote AI from a code suggestion writer to a doer, an actual collaborator. This is what AI agents are all about and we saw a prime opportunity for Deepnote AI to utilize the same approach.

Achieving this involved significantly expanding the range of AI actions. Rather than generating a single block, the AI was now tasked with creating multiple blocks of various types (code and text included). With this capability in place, our users could initiate their projects and even generate entire notebooks from a single prompt. However, a critical component was still absent: the AI's ability to autonomously execute its own code and learn from its errors. This capability was introduced through Auto AI—an agent-like collaborator capable of executing blocks, interpreting data outputs, and self-correcting errors as they arise.
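
The execute-and-self-correct behavior described above can be sketched as a small loop. This is only an illustration under stated assumptions, not how Auto AI is built: `run_block`, `auto_fix_loop` and `fake_model` are hypothetical names, and `fake_model` is a hard-coded stand-in for a real model call.

```python
import traceback
from typing import Callable, Optional


def run_block(code: str, namespace: dict) -> Optional[str]:
    """Execute one code block; return the traceback text on failure, else None."""
    try:
        exec(code, namespace)
        return None
    except Exception:
        return traceback.format_exc()


def auto_fix_loop(
    generate: Callable[[str, Optional[str]], str],
    task: str,
    max_attempts: int = 3,
) -> dict:
    """Generate code, run it, and feed any error back to the generator."""
    namespace: dict = {}  # shared across attempts, like a notebook kernel
    error = None
    for attempt in range(1, max_attempts + 1):
        code = generate(task, error)
        error = run_block(code, namespace)
        if error is None:
            return {"ok": True, "attempts": attempt, "namespace": namespace}
    return {"ok": False, "attempts": max_attempts, "error": error}


def fake_model(task: str, last_error: Optional[str]) -> str:
    # Hypothetical stand-in for a model call: the first draft is buggy,
    # and the second draft "fixes" it once an error is reported.
    if last_error is None:
        return "result = total / count"  # fails: names are undefined
    return "total, count = 10, 4\nresult = total / count"


outcome = auto_fix_loop(fake_model, "compute the average")
print(outcome["ok"], outcome["attempts"], outcome["namespace"]["result"])
```

The cap on attempts matters: without it, a model that keeps producing failing code would loop forever.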

Rolling out this tool was a somewhat daring move, and internally, we grappled with numerous uncertainties. Would our users be comfortable relinquishing control to the AI and allowing it to execute blocks? Would it prove reliable enough for users to patiently await its execution plan?

As it turned out, our users displayed even more courage in embracing AI agents than we initially anticipated! Within just a month, Auto AI emerged as the predominant form of AI assistance, surpassing even Fix in popularity.

From the outset, we had plans for a lot of exciting capabilities to bring into Auto AI. Seeing these results massively increased our confidence in pursuing those future investments.

Flow refinements

Although using generative AI can often feel special, when it comes to product work, these features are not unique snowflakes at all. Yes, the technology is new and fancy, and the potential applications are novel, but in the end, the good old product principles still apply. If customers cannot easily derive tangible value from AI features, the best-case scenario may involve an initial surge of interest followed by rapid abandonment. We saw this pattern clearly in our data with all of our early releases: a big bump in engagement followed by a significant drop over the coming weeks.

The improvements we made on the other fronts proved beneficial, but they had a natural ceiling. What we needed was a better, more polished user experience so that customers could really take advantage of technical upgrades such as richer context. Regardless of the instrumentation we implemented, the most effective approach for navigating these challenges remained continuous product discovery: talking to our customers.

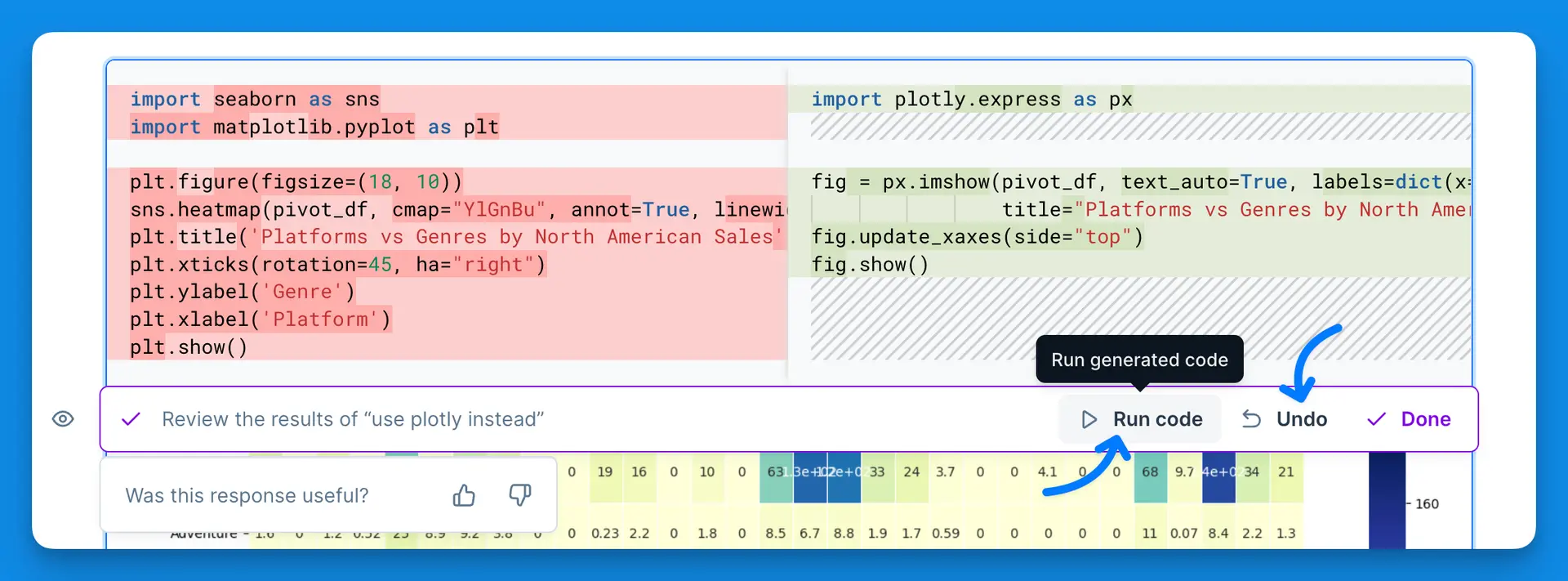

Oh boy, did we encounter numerous surprising and nuanced insights from these chats! One particularly illuminating example arose from our AI Edit flow. Upon requesting an AI edit on their code block, users were presented with a diff view comparing the old code to the suggested changes, allowing them to accept or reject the suggestion. This feature received significant positive feedback, leading us to believe it was doing great. However, we discovered that the vast majority of users didn't actually read the code changes; instead, they simply wanted to run the code immediately to observe the results. Once again, we were pleasantly surprised by our users' confidence in AI!

In response to this feedback, we took action. Two key adjustments were required: first, the suggested code needed to be written directly into the notebook for immediate execution; second, we implemented a "Run code" button to facilitate this workflow.

Once released, it became an instant hit, with a whopping 40% of all AI edits culminating in users clicking that button. It's striking how a simple change can yield such significant results, but it just goes to show—the best insights often stem from good old-fashioned product legwork.

Prompt engineering

No surprises here: working with generative AI requires mastering the art of prompt engineering. While this is a complex topic better suited for my engineer colleagues to delve into, I'll say this much from a product perspective: it's impossible to overstate the level of frustration and trial-and-error exercises that can accompany the release of a new generative AI capability. Prompt engineering is like a black hole, and if you're not careful, it can devour an infinite amount of work.

So how did we deal with this problem?

- We adjusted our expectations. While it may sound discouraging, embracing the reality that perfection isn't attainable allowed us to release with a mindset of continuous learning and improvement, recognizing that things won't be flawless—far from it.

- We invested in developing comprehensive prompt evaluation suites. Initially, we relied heavily on manual eyeballing tests to gauge the impact of prompt changes. However, we soon realized that this approach was unsustainable. While there are many tools available that specialize in this area, they may not fully address all of our needs. Therefore, it's essential to incorporate this aspect into our planning when embarking on AI initiatives.
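
As a rough illustration of what a prompt evaluation suite can look like, the sketch below scores a prompt template against a fixed set of cases. It is not our actual tooling: `EVAL_CASES`, `evaluate_prompt` and `fake_model` are hypothetical, the string-matching checks are deliberately simplistic, and `fake_model` stands in for a real model call.

```python
from typing import Callable

# Each case pairs a user request with a check the generated code must pass.
EVAL_CASES = [
    {"request": "sum a list called xs",
     "check": lambda code: "sum(" in code},
    {"request": "sort a list called xs",
     "check": lambda code: "sorted(" in code or ".sort(" in code},
    {"request": "read data.csv with pandas",
     "check": lambda code: "read_csv" in code},
]


def evaluate_prompt(prompt_template: str, model: Callable[[str], str]) -> float:
    """Return the fraction of eval cases whose output passes its check."""
    passed = 0
    for case in EVAL_CASES:
        output = model(prompt_template.format(request=case["request"]))
        if case["check"](output):
            passed += 1
    return passed / len(EVAL_CASES)


def fake_model(prompt: str) -> str:
    # Hypothetical stand-in for a model call; it only handles two request types.
    if "sum" in prompt:
        return "total = sum(xs)"
    if "sort" in prompt:
        return "xs = sorted(xs)"
    return "print('not sure')"


baseline = evaluate_prompt("Write Python code to {request}.", fake_model)
print(f"pass rate: {baseline:.2f}")
```

Running the same cases against every prompt variant turns eyeballing into a comparable pass rate. In practice the checks would execute the generated code or compare its outputs rather than match substrings.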

Challenges abound, but prompt engineering remains vital and will continue to be a key investment area for us moving forward.

Where do we go from here?

Looking back at our journey with Deepnote AI, it's clear that we've delivered a great deal in the past 8 months. The quantitative and qualitative feedback we've received suggests that we're not just making iterative improvements—Deepnote AI is truly a game-changer for many of our users. However, it's important to recognize that what we have today is just a small part of our AI vision. So, let me paint a picture for you.

Your business stakeholder has a spur-of-the-moment question they're itching to explore. Instead of pinging her already swamped data analyst, she knows she can rely on Deepnote for a quick answer. She logs in, types her query into the search bar, and voila! Deepnote AI swiftly combs through all the relevant content in the workspace, offering up concise summaries based on existing work. Satisfied with the response but intrigued by another aspect of the question, she poses a follow-up query. This time, Deepnote AI doesn't find existing answers, so it kicks into action, firing up a notebook to tackle the problem head-on and promising to notify the stakeholder soon with the results.

As Deepnote AI dives into the project, it seamlessly leverages all the native tools within the notebook—integrations, SQL blocks, Python scripts, chart blocks, you name it. Once the analysis is complete, a new App is automatically generated, and a Slack notification pings the stakeholder, who simply clicks to open the report and delve into the insights.

This is the future we're building. But don't just take my word for it—check out our product roadmap, where many components are already lined up to make this vision a reality. While you're there, feel free to share your thoughts, suggest new ideas, or vote on existing ones—we'd love to hear from you!

Summary

As we wrap up this mammoth of an article, let's quickly recap the most important lessons we've learned while building Deepnote AI.

- Generative AI features are still normal products; the hype doesn’t rewrite the basic product rules. Focus on real user problems and don’t invent solutions for non-existent ones.

- The AI race is won in UX and not in pricing, models or other supportive tech (e.g. retrieval-augmented generation). When it comes to superior UX, in-context, immediately actionable and deep integrations always beat general ones (such as chatbots). Beyond these general principles, however, this is new territory and any interface needs to be constantly refined based on a nuanced understanding of how users interact with AI.

- Context is absolute king when working with generative AI features. Letting the AI know what you know can transform a ‘meh’ experience into a feeling of magic. Continuously seeking opportunities to incorporate more relevant context is key, but it's essential to do so in a privacy-conscious manner.

- Iterative releases are the way to go. There is no time to wait for the perfect product release; there’s just way too much uncertainty. It’s better to give value to customers early and learn together with them. They may surprise you with their boldness! Keep providing more prominent surface areas for AI features while also making AI more of a doer by increasing the range of actions it can perform. On the technical side, invest in more systematic prompt engineering, while making sensible compromises.

- Generating initial upswings in user interest is relatively straightforward, but sustaining engagement over time is challenging. You need to fight for every percentage point increase in engagement, which requires making gradual improvements across multiple fronts.