At Deepnote, we think of the notebook as a universal computational medium. It’s easy to get started with, but allows for great composability. As a result, the notebook can serve not just as a tool for data exploration, but also as an elegant building block for a company’s entire data platform.

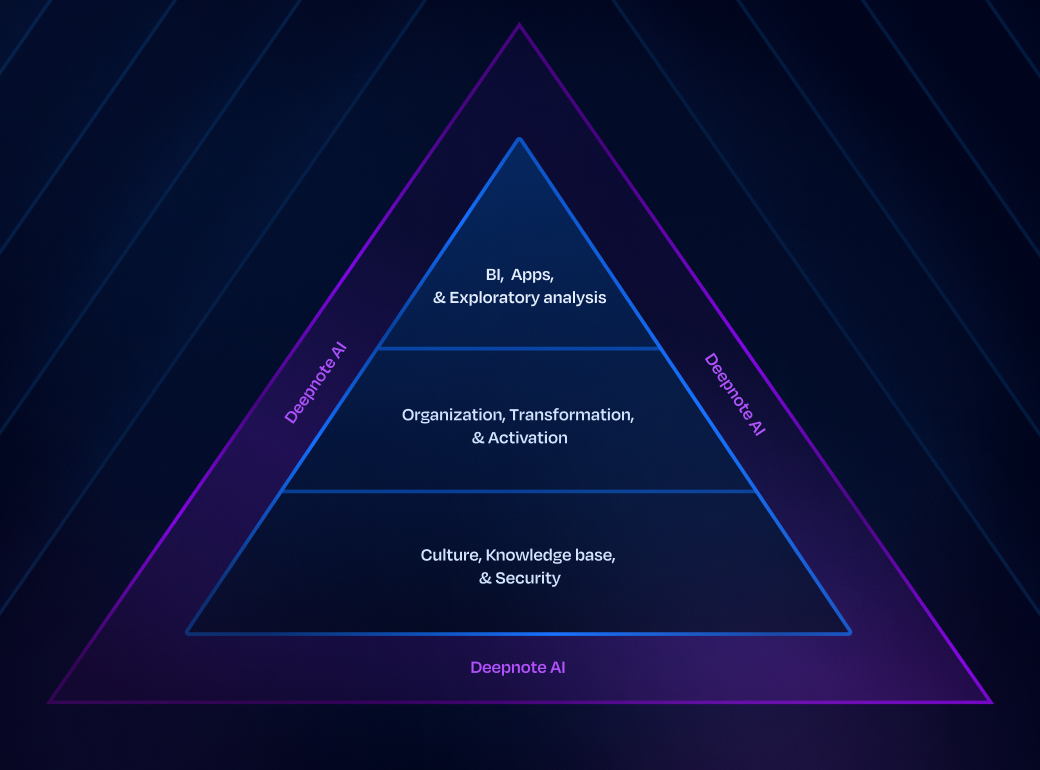

In this post, we’ll explore how we at Deepnote use Deepnote to power our entire data infrastructure, which we like to think is organized as a pyramid structure.

Read on to learn how we use Deepnote:

- To build our collaborative culture, knowledge base and security model

- To organize, transform and activate our data

- To perform business intelligence, exploratory analysis and app development

Notebooks as the foundation

Let’s start from the bottom and work our way to the top of the pyramid that is Deepnote’s infrastructure organization.

A culture of collaboration

Everyone at Deepnote, no matter their title, experience, or background, has access to Deepnote to not only fundamentally understand the product they’re contributing to, but also to use it for their workflows—whether they’re an engineer or a sales representative.

Two features that allow everyone in the company to get the most out of Deepnote for their specific roles are workspaces and Deepnote AI.

Workspaces act as a “control panel” by allowing individual teams to control which people have access to what projects. In this way, everyone at Deepnote can organize and customize their own workflows to their specific function.

Deepnote AI boosts the efficiency of not only our engineering teams, but also our non-technical team members. In every single notebook used by our teams, Deepnote AI is available to assist in generating code suggestions to accomplish any plain-English task it receives. Bonus: it’s even smarter in its suggestions when it leverages the context from existing code in these notebooks.

However, one team that does not benefit from Deepnote workspaces and AI is the dedicated data team. And this is because we don’t have a dedicated data team. Why? Because with the access to data resources achieved from workspaces and the data workflow efficiency achieved from our AI features, Deepnote serves as the self-serve data tool that enables everyone to be their own data analyst, effectively turning the whole company into a data team.

Notebooks as a knowledge base

At the next level in our pyramid of needs is a shared knowledge base of our data assets. This kind of knowledge base is normally achieved using data lineage and data catalogs, which help organizations discover and map data flowing through data pipelines. It’s essential in processes like creating analyses for your stakeholders and developing machine learning algorithms.

It turns out, cloud-based notebooks conveniently already provide data lineage and catalog capabilities natively. All notebooks in a given workspace are centralized in a top-down organization that acts as a database of knowledge, where our teams can easily browse and search for pieces of knowledge from teammates’ projects, and also find helpful project-level metadata such as location, machine status, author, and more. So even though our own knowledge base is scattered across thousands of notebooks, everything is neatly catalogued and searchable within organized workspaces.

What’s more, the organization structure of all notebooks in our workspaces is catalogued via notebook dependencies, which allows us to always know where our data is coming from. Maybe even more importantly, it also allows us to keep consistent metrics definitions across all analyses.

For example, our Customer 360 notebook uses definitions of users, teams and workspaces that were derived in upstream notebooks, such as the dedicated users table notebook, explained in more detail below. The linkages and references between notebooks are then automatically tracked as hyperlinks in a convenient Dependencies section, enabling future users to have an immediate understanding of how the analysis was derived as soon as they jump into the notebook.

Instead of hunting for and sharing static notebooks in GitHub or, god forbid, on local machines, team members access this shared knowledge base directly and can immediately collaborate on projects with the same knowledge of data sources, environment configurations, and target outputs.

Documentation of our cloud warehouse schemas, tables, columns and changes to them is another capability that comes naturally with Deepnote notebooks, thanks to the built-in rich text editor and schema browser. Take our universal users table, for example. We have a dedicated notebook that describes the table’s data, metadata and business context in depth. It filters out inactive and duplicate accounts, and serves as our source of truth for the definition of a user for all downstream analyses.
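To make the idea of a source-of-truth users table concrete, here is a minimal Python sketch of the kind of filtering such a notebook might document. The field names (`email`, `is_active`, `created_at`) and the rule of keeping the earliest account per email are assumptions for illustration, not our actual schema or logic.

```python
from datetime import date

# Hypothetical raw account rows; the field names are illustrative assumptions.
raw_accounts = [
    {"id": 1, "email": "ana@example.com", "is_active": True, "created_at": date(2023, 1, 5)},
    {"id": 2, "email": "Ana@Example.com", "is_active": True, "created_at": date(2023, 3, 9)},
    {"id": 3, "email": "bo@example.com", "is_active": False, "created_at": date(2023, 2, 1)},
    {"id": 4, "email": "cy@example.com", "is_active": True, "created_at": date(2023, 4, 2)},
]


def build_users_table(accounts):
    """Drop inactive accounts, then keep the earliest account per normalized email."""
    earliest = {}
    for row in accounts:
        if not row["is_active"]:
            continue
        key = row["email"].strip().lower()  # treat case variants as duplicates
        if key not in earliest or row["created_at"] < earliest[key]["created_at"]:
            earliest[key] = row
    return sorted(earliest.values(), key=lambda r: r["id"])


users = build_users_table(raw_accounts)
print([u["id"] for u in users])
```

Downstream analyses would then read from the resulting table rather than re-deriving their own definition of a user.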

Lastly, this knowledge base is critical for providing the context that our AI needs to generate its code suggestions. For example, when our Product Managers need to analyze paying user behavior in Deepnote Apps, they can rely on Deepnote AI to pull in the correct definitions of a user, payment behavior and App usage. But they can also count on the AI to generate a correct, efficient SQL query to join all of those definitions together. By leveraging the work and data knowledge base across your workspaces, Deepnote AI has the same knowledge you have to work by your side as a true autonomous collaborator.

Secure foundations

Deepnote is the foundation, not just for our knowledge base, but also for our security model.

Shared workspaces and granular role-based access controls of Deepnote notebooks make our data sharing practices secure and simple. We can easily control which team members get what access to notebooks and apps by sharing role-based invite links, which eliminate the need for downloading CSV files and screenshots to local machines.

Additionally, two-factor authentication, enforced through Google OAuth, allows us to verify users trying to access our internal accounts while also keeping sensitive customer data private.

We also create secure engineering environments by organizing our work into two main workspaces.

The first workspace has access to all production databases and access is limited to only a small number of Deepnote employees with security reviews.

- Everything in this workspace is automatically audited and versioned, from SQL queries to Python scripts to terminal commands.

- This workspace contains apps that need full access to our production database, such as an app for upgrading our customers to different plans, which also need to be shared with other team members (e.g. a customer support team). These apps are created by team members who are authorized to write production code, and are shared as read-only (allowing executions) with other team members.

- As a nice benefit of working in a notebook, every execution of the app is audited and every change is versioned. One of the apps is a data transformation notebook, scheduled to run every hour, that reads data from our production database, strips it of confidential information, and writes it into our analytics warehouse to be used in our second workspace - our analytics workspace.

This analytics workspace is dedicated to traditional analytical and exploratory work, and the data comes from read-only data warehouses and connections.

- This workspace is available to every team member, allowing everyone to run their analyses and understand the downstream impact of their work (e.g. product managers understanding the impact of their onboarding flow experiment on overall user activation).
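The hourly transformation app described above, which strips confidential information before data reaches the analytics workspace, can be sketched roughly as follows. This is a simplified illustration under stated assumptions: the list of confidential fields, the row shape and the keyed-hash token are all hypothetical, and a real notebook would read from and write to actual database connections.

```python
import hashlib
import hmac

# Hypothetical: which columns count as confidential, and a placeholder secret key.
CONFIDENTIAL_FIELDS = {"email", "full_name", "card_last4"}
SECRET_KEY = b"replace-with-a-real-secret"


def pseudonymize(value: str) -> str:
    """Return a stable keyed-hash token so rows can still be joined downstream."""
    digest = hmac.new(SECRET_KEY, value.encode("utf-8"), hashlib.sha256)
    return digest.hexdigest()[:16]


def strip_confidential(row: dict) -> dict:
    """Copy a production row, dropping confidential fields and adding a user token."""
    clean = {k: v for k, v in row.items() if k not in CONFIDENTIAL_FIELDS}
    clean["user_token"] = pseudonymize(row["email"].lower())
    return clean


production_rows = [
    {"email": "ana@example.com", "full_name": "Ana A.", "card_last4": "4242",
     "plan": "team", "seats": 5},
]
analytics_rows = [strip_confidential(r) for r in production_rows]
print(analytics_rows[0])
```

Keeping a token instead of the raw email lets analysts in the second workspace count and join users without ever seeing who they are.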

All of these safeguards and practices have helped Deepnote teams experiment, collaborate and iterate rapidly without sacrificing security.

Notebooks for data orchestration

If you work at a place anything like Deepnote, your data is often scattered in its sources and siloed in its destinations. Many in our position would have turned to dedicated data orchestration tools to organize their fragmented data pipelines. However, we saw the opportunity to build this capability elegantly within notebooks.

Data orchestration happens in three steps: organization, transformation, and activation. Let’s walk through how Deepnote teams use notebooks to perform these phases in order to automate data workflows.

Organization. We gather data coming from various sources—e.g. billing data from Stripe, product usage data from Segment, community data from social media feeds—inside of our production warehouse using BigQuery. Users can connect notebooks to this organized repository of data to then start the transformation step.

Transformation. Data from disparate sources must be transformed into a standardized format. We found that this step, normally done using tools like dbt, made a lot more sense when done in the same place where downstream data applications like analyses, visualizations and machine learning models were happening—the notebook. By being able to document and track transformation logic in the same document where we’re performing analyses, downstream users have immediate insight into where the data they’re using came from and how it got there.

But there are also cases where we create transformations in one notebook and use the transformed data in other places. One example of this is how we handle our daily billing data. Our raw billing data from Stripe is denormalized on a daily basis by a dedicated notebook that is scheduled to run every day. The result is then uploaded into our warehouse as a transformed table. We can then access this data downstream in a much more performant way, for example in notebooks from a different workspace (like our analytics workspace).
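As a rough illustration of what denormalizing billing data means here, the sketch below flattens nested invoice records into one wide row per line item. The record shape is a simplified, hypothetical stand-in and not the actual Stripe schema or our transformation logic.

```python
# Simplified, hypothetical invoice records; real Stripe objects have many more fields.
invoices = [
    {
        "invoice_id": "inv_1",
        "customer": "cus_A",
        "date": "2024-01-01",
        "lines": [
            {"product": "team_seat", "quantity": 3, "amount_cents": 9000},
            {"product": "hardware_hours", "quantity": 10, "amount_cents": 2500},
        ],
    },
    {
        "invoice_id": "inv_2",
        "customer": "cus_B",
        "date": "2024-01-01",
        "lines": [{"product": "team_seat", "quantity": 1, "amount_cents": 3000}],
    },
]


def denormalize(invoice_records):
    """Flatten nested invoices into one wide row per line item."""
    rows = []
    for inv in invoice_records:
        for line in inv["lines"]:
            rows.append(
                {
                    "invoice_id": inv["invoice_id"],
                    "customer": inv["customer"],
                    "date": inv["date"],
                    **line,  # product, quantity, amount_cents
                }
            )
    return rows


billing_rows = denormalize(invoices)
print(len(billing_rows))
```

Because every row already carries its customer and date, downstream notebooks can aggregate without repeating the joins.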

However, whenever we have to work across multiple workspaces, we lose the lineage information: it’s no longer possible to link the data from the final dashboard all the way back to its source. This is something we are currently working on in Deepnote as a product, and soon we will be able to track lineage across workspaces as well.

Activation. This step involves actually making the cleaned and consolidated data usable by delivering it to downstream tools. However, since activation of this transformed data is happening in the same place where the actual transforming is happening—again, the notebook—the actual delivery is transparent and instantaneous. This is yet another advantage of doing everything in notebooks. By only activating the transformed data that is required for the task at hand, on-demand if you will, we avoid common problems like dbt models that are used for one-off dashboards and never again.

A good example of the benefits of notebook-based data activation is how we replaced an external customer churn prediction tool with just one notebook. The tool previously received data from Segment, which pulls from our product-events warehouse hosted in Google BigQuery. All of that could be done natively in Deepnote, which saved the time and money we previously spent maintaining yet another data tool.

Essentially, activation is being able to use data to build things or learn and share insights. Which brings us to the top of our figurative pyramid.

Notebooks for insights

Business intelligence

Traditional BI tools are built for spending hours on a dashboard that no one will touch again. Deepnote is built for iterative exploration, experimentation, and collaboration with your stakeholders from beginning to end of an analysis.

We’ve never had the need for a separate BI tool because we can aggregate, summarize and present insights all within Deepnote. With the unique combination of code, text and visualization blocks all in one place, notebooks allow us to not only create more advanced dashboards, but also tell the story behind our data to stakeholders in a detailed, transparent way that purely visual BI tools can’t.

For example, we’re able to hold board meetings in Deepnote because we can not only visually present our most important business metrics, but also show the source and logic behind how the metrics were derived via Python and SQL blocks, while adding style and structure to the narrative using customized text blocks.

Our projects require collaboration across multiple teams and engagement from stakeholders early on and often, not just when the final product is ready to be delivered. This is yet another reason we work in Deepnote instead of traditional BI tools, which are only shareable after you’ve spent several weeks gathering requirements, building all the requested charts and assembling them into a stale, clunky dashboard.

Check out some examples of our most common BI use cases:

Customer 360

Our customer team is one that needs to be equipped with the most up-to-date data on account activity so that they can quickly respond to customer requests, prepare for customer meetings, and intervene when accounts are at risk of churning. The sales team had this information scattered across multiple external tools in their sales stack. Consolidation was in order.

The result was our most frequently used tool for everyone at the company, not just our sales and customer support teams. This notebook allows us to quickly pull up any customer and see information on their workspace details, billing details, recent support tickets, activity levels, growth in usage, seats used vs paid for, as well as lists of their most highly used projects and apps—all in one view.

Monitoring server costs

Keeping tabs on our AWS server costs is an important part of our engineers’ day-to-day work, since our product is hosted entirely in the cloud. AWS of course has plenty of tools customers can use to monitor usage over time and estimate future spend on the platform. But we needed more.

Not only did we need to report on those numbers segmented by various internal definitions of our user groups, but we also needed to run analyses that were entirely unavailable in AWS, such as measuring customer acquisition costs (CAC) and customer lifetime value (LTV). Enriching these calculations with data from other sources like our production warehouse in BigQuery and our user database in Postgres was the only way we could map AWS’ out-of-the-box metrics to our business’ north star metrics.

Deepnote notebooks were the intuitive place to do that because of the BigQuery and Amazon S3 integrations and the ability to maintain consistent definitions of the business metrics we care about throughout our notebook-powered knowledge base.
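A minimal sketch of this kind of enrichment might look like the following. The inputs, the plan-based segments and the simplified CAC and LTV formulas are assumptions for illustration; they are common textbook definitions, not necessarily the ones we use internally.

```python
from collections import defaultdict

# Hypothetical inputs: monthly AWS cost per workspace and each workspace's plan.
aws_costs = [
    {"workspace": "w1", "cost_usd": 120.0},
    {"workspace": "w2", "cost_usd": 30.0},
    {"workspace": "w3", "cost_usd": 50.0},
]
workspace_plans = {"w1": "enterprise", "w2": "free", "w3": "free"}


def cost_by_segment(costs, plans):
    """Sum infrastructure cost per plan; unmapped workspaces land in 'unknown'."""
    totals = defaultdict(float)
    for row in costs:
        totals[plans.get(row["workspace"], "unknown")] += row["cost_usd"]
    return dict(totals)


def cac(acquisition_spend_usd, new_customers):
    """Customer acquisition cost: spend divided by customers acquired."""
    return acquisition_spend_usd / new_customers


def ltv(monthly_revenue_per_customer, gross_margin, monthly_churn_rate):
    """One common simplified LTV: margin-adjusted revenue over expected lifetime."""
    return monthly_revenue_per_customer * gross_margin / monthly_churn_rate


segment_costs = cost_by_segment(aws_costs, workspace_plans)
print(segment_costs)
print(cac(10_000, 40), ltv(100, 0.8, 0.05))
```

The segment mapping is the part AWS cannot provide on its own, which is why the join with our own user data matters.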

Check out this sample AWS cost analysis notebook.

Exploratory analysis

Exploratory programming is a unique need for data teams and is what separates them from other technical teams like software engineers. Data teams often spend a lot of their time trying to experiment and understand the problem space well before being able to arrive at their insights or build any model, and our own teams at Deepnote are no exception.

By providing a single place to iteratively query, code, visualize and contextualize data, Deepnote serves as the only tool that fits into all of our teams’ workflows for troubleshooting, discovering, experimenting, you name it. Not to mention, using AI to do all of the above makes Deepnote the intuitive choice for fast exploratory programming.

Evaluating experiments

Implementation of all of our experiments is done through Amplitude, where we have the ability to create feature flags, define our experiment goals and inputs, and deploy the test. But we found monitoring of the experiments while they’re in flight is best done in Deepnote, where notebooks dedicated to each experiment display the goal metric and traffic split over time, and also allow our teams to perform ad hoc calculations on the data as it comes in.

For example, in our experiment that added prompts for credit card information and exposure to a “Getting started” module to our onboarding flow, we needed user and billing data joined in so that we had the whole picture of who was converting and what they were purchasing as a result of being exposed to the onboarding flow variant.
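The in-flight monitoring described above boils down to tracking traffic split and the goal metric per variant. Here is a minimal sketch, assuming exposure events have already been joined with billing data; the field names and the sample rows are hypothetical.

```python
from collections import Counter

# Hypothetical exposure events already joined with billing data.
exposures = [
    {"user": "u1", "variant": "control", "converted": False},
    {"user": "u2", "variant": "control", "converted": True},
    {"user": "u3", "variant": "treatment", "converted": True},
    {"user": "u4", "variant": "treatment", "converted": True},
    {"user": "u5", "variant": "treatment", "converted": False},
    {"user": "u6", "variant": "control", "converted": False},
]


def summarize(events):
    """Return per-variant traffic share and conversion rate."""
    assigned = Counter(e["variant"] for e in events)
    converted = Counter(e["variant"] for e in events if e["converted"])
    total = sum(assigned.values())
    return {
        variant: {
            "traffic_share": count / total,
            "conversion_rate": converted[variant] / count,
        }
        for variant, count in assigned.items()
    }


summary = summarize(exposures)
for variant, stats in summary.items():
    print(variant, stats)
```

A skewed traffic share is often the first sign that an experiment is misconfigured, so it is worth watching alongside the goal metric.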

Check out this sample AB testing notebook.

Performance issue debugging

Faster notebook loading was a highly requested fix identified in this app, so our engineers set out on the quest to eliminate dreaded loading circles and “Initializing…” messages throughout the product.

But to do that, our engineers had to have a thorough understanding of what typical load times looked like, how they’ve changed over time and the specific projects and customers most affected by the issue—all questions that needed to be explored before any solution could be deployed.
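An exploratory first pass at those questions could be as simple as tracking median and tail load times per week. The sketch below assumes a hypothetical list of load-time events; the week labels and timings are made up for illustration.

```python
from collections import defaultdict
from statistics import median, quantiles

# Hypothetical load-time events: (ISO week label, seconds to load a notebook).
load_events = [
    ("2024-W01", 2.1), ("2024-W01", 3.4), ("2024-W01", 9.8), ("2024-W01", 2.7),
    ("2024-W02", 1.9), ("2024-W02", 2.2), ("2024-W02", 4.1), ("2024-W02", 2.0),
]


def weekly_load_stats(events):
    """Group load times by week and report the median (p50) and 95th percentile."""
    by_week = defaultdict(list)
    for week, seconds in events:
        by_week[week].append(seconds)
    return {
        week: {
            "p50": median(values),
            # n=20 yields 19 cut points; the last one is the 95th percentile.
            "p95": quantiles(values, n=20, method="inclusive")[-1],
        }
        for week, values in sorted(by_week.items())
    }


stats = weekly_load_stats(load_events)
for week, row in stats.items():
    print(week, row)
```

Looking at the tail as well as the median matters here, because the users who complain are usually the ones stuck in the slowest loads.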

Apps

Apps are the eyes into the soul of our systems. We designed apps to be the polished, presentation-ready version of a notebook that you can easily share with business stakeholders. But in our own use of Deepnote apps and stretching its limits, we discovered that apps are destined for so much more.

Here are some examples of apps our teams use every day:

Customer data privacy

When a user asks us to erase all of their personal data, we must delete or anonymize it within 30 days across all of our databases, tools and internal systems. That’s a lot of different places we’d have to expunge manually, one by one, if we didn’t have Deepnote.

Using our ‘Delete a user’ notebook, someone from the customer team can simply enter the requester’s email and run the notebook. Each subsequent block contains an API request that deletes the user from one of our tools, and the blocks execute one after another.

This notebook also includes a documentation layer, where all deletion requests are automatically appended to a list that serves as an audit log.
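The overall shape of such a notebook can be sketched as below. The two deletion functions are hypothetical stand-ins for real API requests, and the in-memory list stands in for a persisted audit log; none of these names come from our actual notebook.

```python
import hashlib
from datetime import datetime, timezone

audit_log = []  # Stand-in for a persisted table or file.


def delete_from_crm(email):
    """Hypothetical stand-in for an API request to one tool."""
    return True


def delete_from_analytics(email):
    """Hypothetical stand-in for an API request to another tool."""
    return True


# Each entry plays the role of one notebook block.
DELETION_STEPS = {"crm": delete_from_crm, "analytics": delete_from_analytics}


def delete_user(email, steps=DELETION_STEPS):
    """Run every deletion step and append the outcome to the audit log."""
    results = {}
    for tool, step in steps.items():
        try:
            results[tool] = bool(step(email))
        except Exception:
            results[tool] = False  # Record the failure and keep going.
    entry = {
        "requested_at": datetime.now(timezone.utc).isoformat(),
        # Log a digest rather than the email itself (an illustrative choice).
        "requester_digest": hashlib.sha256(email.lower().encode("utf-8")).hexdigest(),
        "results": results,
        "complete": all(results.values()),
    }
    audit_log.append(entry)
    return entry


entry = delete_user("ana@example.com")
print(entry["complete"], len(audit_log))
```

Recording a per-tool result makes it easy to spot and retry a partial deletion before the 30-day window closes.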

North star metrics

How do you get to your north star metrics? By taking the Polar Express of course!

The Polar Express is an app we invented using nothing but a Deepnote notebook. This app sends daily updates of our most important metrics to our company’s #general channel in Slack, which helps us keep everyone’s eyes on the prize.

For us, that prize is increasing the number of paying customers and ARR, which are north star metrics tracked on a daily basis through Stripe’s API. This allows us to bring in the relevant billing metrics and assemble them into a message that then gets sent to our #general channel every day using the Slack API, all in one notebook in Deepnote.
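The message-assembly step might look something like the sketch below. The function name, message layout and numbers are made up for illustration; in a real notebook the metrics would come from Stripe, and the resulting payload could be sent to Slack, for instance as the JSON body of an incoming webhook request.

```python
import json


def format_metrics_message(day, paying_customers, arr_usd, prev_paying_customers):
    """Build a Slack-style payload summarizing the day's north star metrics."""
    delta = paying_customers - prev_paying_customers
    text = (
        f"Polar Express for {day}\n"
        f"Paying customers: {paying_customers} ({delta:+d} vs. yesterday)\n"
        f"ARR: ${arr_usd:,.0f}"
    )
    return {"text": text}


# Made-up placeholder numbers, not real company metrics.
payload = format_metrics_message("2024-01-15", 120, 360_000, 118)
print(json.dumps(payload, indent=2))
```

Keeping the formatting in a plain function makes the message easy to preview in the notebook before the scheduled run posts it.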

Abuse detection

All Deepnote notebooks run on machines in the cloud, which means compute is provided even to free account users. As a result, we unfortunately attract users who abuse notebook compute for crypto mining, video streaming, scamming and the like (don’t get any ideas!).

Rather than having to evaluate the activity of, and potentially suspend, each and every suspicious account manually, our platform team has built the logic to do this systematically from just a handful of notebooks that are scheduled to run every day.

This set of notebooks evaluates all accounts to flag potential instances of suspicious activity based on attributes like IP addresses, domains, processes and even the contents of the blocks in a notebook. If an account or activity is flagged, the notebooks automatically send email alerts to our security team, who can then swiftly suspend perpetrators’ access to all projects in Deepnote.
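A heavily simplified, rule-based version of this flagging logic is sketched below. The specific signals (a blocked email domain, a known miner process name, a mining-pool URL scheme in block contents) and the account shape are assumptions for illustration; real detection rules would be broader and tuned over time.

```python
# Hypothetical signals; real rules would be tuned and far more extensive.
SUSPICIOUS_PROCESSES = {"xmrig"}
BLOCKED_EMAIL_DOMAINS = {"tempmail.example"}
SUSPICIOUS_SNIPPETS = ("stratum+tcp://",)


def flag_account(account):
    """Return the list of reasons an account looks suspicious (empty if none)."""
    reasons = []
    if account["email"].rsplit("@", 1)[-1].lower() in BLOCKED_EMAIL_DOMAINS:
        reasons.append("blocked email domain")
    hits = SUSPICIOUS_PROCESSES & set(account["processes"])
    if hits:
        reasons.append("suspicious process: " + ", ".join(sorted(hits)))
    if any(s in block for block in account["blocks"] for s in SUSPICIOUS_SNIPPETS):
        reasons.append("suspicious block contents")
    return reasons


accounts = [
    {"id": "a1", "email": "dev@company.example", "processes": ["python"],
     "blocks": ["import pandas as pd"]},
    {"id": "a2", "email": "x@tempmail.example", "processes": ["python", "xmrig"],
     "blocks": ["!./xmrig -o stratum+tcp://pool.example:3333"]},
]

# Accounts with at least one reason would trigger an alert to the security team.
alerts = {a["id"]: flag_account(a) for a in accounts if flag_account(a)}
print(alerts)
```

Returning human-readable reasons, rather than a bare yes or no, gives whoever reviews the alert enough context to decide on a suspension quickly.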

Account management

Users coming off of a free trial often come to us asking for more time to try out all of our cool features, and how could we say no to that?

Account management, whether that’s for alerting the sales team if a customer invoice is overdue, upgrading or downgrading plans, adding more seats or hardware hours, is all done through—you guessed it—notebooks.

These notebooks give our customer teams the ability to make changes to accounts simply by inputting information about their workspace, their plan type and the number of extra seats or hours requested, then running the notebook.

Check out this sample notebook used to switch a given user's plan.

Predictive modeling

Deploying machine learning models to production is one of the most common use cases for Deepnote. One of the earliest adopters of Deepnote for machine learning was not a customer, but rather our own internal teams—our sales team, for example.

The combination of a medium that data scientists know and love for developing models (aka notebooks) with a layer for easily deploying those models (aka apps) was exactly what our sales team needed to be able to do things like quickly forecast sales, target customers at risk of churning and provide extra service for customers most likely to expand their contracts.

The beauty of having both the model development and deployment components in Deepnote is that these non-technical team members have full access to the inputs and outputs of these churn and expansion prediction models that help them do their everyday jobs in a data-driven way. Instead of working with a rigid tool, they can tweak the prediction algorithms and do last mile analyses all on their own, while also understanding the code behind what they’ve built thanks to Deepnote AI’s explain and edit capabilities.

Check out this sample Lead scoring notebook.

Not just any notebook, but Deepnote

From business intelligence and security of our infrastructure, to daily messaging and administrative tasks, notebooks are at the heart of it all. We use notebooks for our most complex and simplest use cases because it’s not just any notebook we’re using, but Deepnote.

Deepnote’s native connectors with almost every data source we have allow us to position our notebooks at the center of our own data stack.

Its cloud-based nature and business-user-friendly features such as visualization blocks and apps mean we can bring all team members to the table so that anyone can contribute to any part of our company’s pyramid of needs, regardless of their technical abilities.

In reality, not everyone needs to be involved in all of the many different efforts happening in our company at a given time, and this is where features of Deepnote as a platform—such as access controls and project organization structure—come in to ensure that we’re not just bringing everyone to the table, but rather creating multiple tables where everyone has a seat where they belong.

But at each of those tables we’ve created, each person is equipped with all the tools and organizational understanding they need to help themselves (self-serve, if you will) and start being productive as soon as they start at the company. Our AI tools, in particular, serve to 10x each member’s contributions as soon as they’re up and running.

Our pyramid structure of needs is not unique to our company, and neither is the ability to use notebooks to address any of the needs individually. Indeed, the whole point of this blog post was to peel back the cover on how notebooks can serve many of these different use cases, so that you can start to understand how to solve your own security, orchestration, or business intelligence problems using one tool instead of a dozen different ones.

But what is unique is Deepnote: none of this would be possible with any other notebook. Its cloud-based nature and its collaboration and control features make Deepnote notebooks in particular flexible and powerful enough to sit at the center of all of our systems. If you’re looking to simplify and consolidate your infrastructure in the same way, give Deepnote a try.